Improvement for Feature Expression

inverted residual with linear bottleneck
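As a rough sketch of the block structure (assuming the usual MobileNetV2-style layout with 3x3 depthwise kernels and an expansion factor of 6; the function name and tuple layout are hypothetical), the layers and their weight counts can be listed as follows. The last 1x1 convolution has no activation, which is the "linear bottleneck"; the skip connection is assumed to apply only when the input and output shapes match.

```python
def inverted_residual_layers(c_in, c_out, stride=1, expand=6):
    """List the layers of one inverted residual block with weight counts.

    Assumes 3x3 depthwise kernels and ignores biases and batch norm.
    """
    hidden = c_in * expand  # the block is wide in the middle, narrow at both ends
    layers = [
        ("1x1 expansion conv + ReLU6", c_in, hidden, c_in * hidden),
        ("3x3 depthwise conv + ReLU6", hidden, hidden, 3 * 3 * hidden),
        ("1x1 projection conv, linear (no activation)", hidden, c_out, hidden * c_out),
    ]
    # The residual connects the two narrow ends, so shapes must match.
    use_skip = stride == 1 and c_in == c_out
    return layers, use_skip


layers, use_skip = inverted_residual_layers(24, 24)
total_weights = sum(weights for _, _, _, weights in layers)
```

With 24 input and output channels, the hidden width is 144 and the skip connection is used; with `stride=2` the skip is dropped.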

ReLU6, ReLU, PReLU
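For reference, the three activations can be written as scalar functions. This is a minimal sketch: in PReLU the negative slope is a learned parameter, so the default `slope=0.25` here is only an illustrative assumption.

```python
def relu(x):
    """ReLU: max(0, x)."""
    return max(0.0, x)


def relu6(x):
    """ReLU6: ReLU clipped at 6, i.e. min(max(0, x), 6)."""
    return min(max(0.0, x), 6.0)


def prelu(x, slope=0.25):
    """PReLU: identity for x > 0, slope * x otherwise (slope is learned in practice)."""
    return x if x > 0 else slope * x
```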

Acceleration and Compression

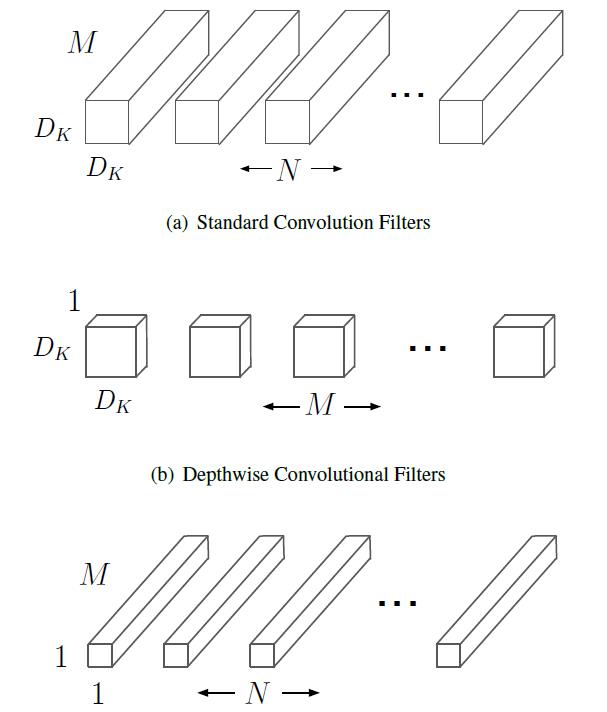

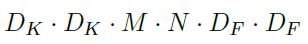

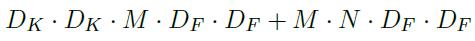

Depthwise Separable Convolution

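The computational cost is reduced from

$$D_K \cdot D_K \cdot M \cdot N \cdot D_F \cdot D_F$$

to

$$D_K \cdot D_K \cdot M \cdot D_F \cdot D_F + M \cdot N \cdot D_F \cdot D_F$$

where $D_K$ is the kernel size, $M$ and $N$ are the numbers of input and output channels, and $D_F$ is the spatial size of the output feature map (notation as in the MobileNet paper).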
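The saving can be checked with a small multiply-accumulate (MAC) count. This is a sketch under the usual assumptions (square kernel of size `k`, `m` input channels, `n` output channels, square output map of size `f`, no bias); the function names are hypothetical.

```python
def standard_conv_macs(k, m, n, f):
    """MACs of a standard k x k convolution producing an f x f x n output."""
    return k * k * m * n * f * f


def depthwise_separable_macs(k, m, n, f):
    """MACs of a k x k depthwise convolution followed by a 1x1 pointwise one."""
    depthwise = k * k * m * f * f  # one k x k filter per input channel
    pointwise = m * n * f * f      # 1x1 convolution mixing channels
    return depthwise + pointwise


# Example layer: 3x3 kernel, 32 -> 64 channels, 112 x 112 output.
standard = standard_conv_macs(3, 32, 64, 112)
separable = depthwise_separable_macs(3, 32, 64, 112)
ratio = separable / standard  # equals 1/n + 1/k**2
```

For this example layer the ratio is 1/64 + 1/9, roughly 0.127, so the separable version needs about one eighth of the MACs.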

Group Convolution

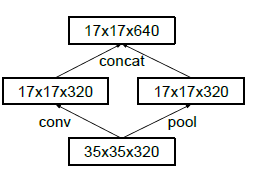
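A quick way to see what grouping buys is to count weights. In this sketch (hypothetical function name, square kernel, no bias), each of the `groups` groups connects only its own slice of input channels to its own slice of output channels, so the weight count drops by a factor of `groups`.

```python
def group_conv_weights(k, c_in, c_out, groups=1):
    """Weight count of a k x k convolution split into `groups` groups."""
    if c_in % groups != 0 or c_out % groups != 0:
        raise ValueError("c_in and c_out must both be divisible by groups")
    per_group = k * k * (c_in // groups) * (c_out // groups)
    return per_group * groups


standard = group_conv_weights(3, 64, 128, groups=1)  # ordinary convolution
grouped = group_conv_weights(3, 64, 128, groups=4)   # 4x fewer weights
depthwise = group_conv_weights(3, 64, 64, groups=64) # one filter per channel
```

Setting `groups` equal to the channel count gives the depthwise case, which ties group convolution back to depthwise separable convolution.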

Reducing the feature map from 35x35x320 to 17x17x640:

1. conv + pooling

2. pooling + conv

3. conv with stride 2

It remains to be studied which of options 2 and 3 is faster.
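A back-of-the-envelope MAC count for the three orderings, as a sketch only: it assumes 3x3 convolution kernels, a pooling layer that takes 35x35 to 17x17, and it ignores the cost of pooling itself. None of these choices are stated in the notes, and MACs are not the same as wall-clock speed.

```python
def conv_output_size(n, k, stride, padding=0):
    """Spatial output size of a convolution: floor((n + 2p - k) / s) + 1."""
    return (n + 2 * padding - k) // stride + 1


def conv_macs(k, c_in, c_out, out_size):
    """MACs of a k x k convolution with an out_size x out_size output."""
    return k * k * c_in * c_out * out_size * out_size


# A 3x3 stride-2 convolution without padding maps 35 -> 17.
reduced = conv_output_size(35, k=3, stride=2)

# conv then pooling: the convolution runs at full 35 x 35 resolution.
conv_then_pool = conv_macs(3, 320, 640, 35)
# pooling then conv: the convolution runs on the pooled 17 x 17 map.
pool_then_conv = conv_macs(3, 320, 640, 17)
# conv with stride 2: the convolution directly produces the 17 x 17 map.
strided_conv = conv_macs(3, 320, 640, reduced)
```

Under these assumptions, pooling followed by a convolution and a stride-2 convolution have the same MAC count, while convolving first is about four times more expensive, so any speed difference between the two cheaper variants would have to be measured.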

Inspired Architecture

Insight

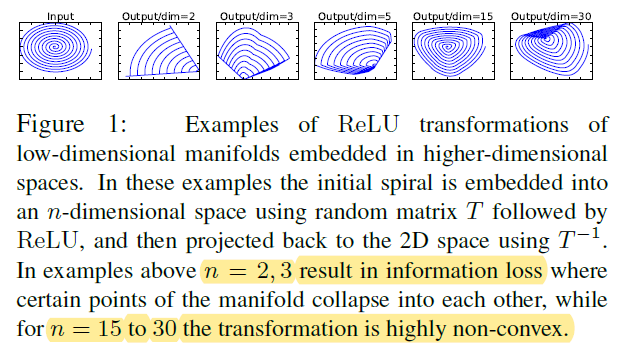

The manifold of interest should lie in a low-dimensional subspace of the higher-dimensional activation space. A non-linearity such as ReLU destroys information when it is applied in a low-dimensional space.
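A toy illustration of this insight, not the experiment from any paper: points on a 2-D unit circle are mapped linearly into 2 or 8 dimensions and passed through ReLU. The embedding matrices are hand-picked assumptions. A point counts as "collapsed" when every output is zero, meaning all information about it is lost.

```python
import math


def relu(vector):
    return [max(0.0, x) for x in vector]


def embed(point, rows):
    """Linear map from 2-D to len(rows) dimensions (one dot product per row)."""
    return [r[0] * point[0] + r[1] * point[1] for r in rows]


def collapsed_fraction(rows, n_points=360):
    """Fraction of unit-circle points that ReLU maps to the all-zero vector."""
    collapsed = 0
    for j in range(n_points):
        angle = 2 * math.pi * (j + 0.5) / n_points  # half-step offset avoids the axes
        point = (math.cos(angle), math.sin(angle))
        if all(x == 0.0 for x in relu(embed(point, rows))):
            collapsed += 1
    return collapsed / n_points


# Low-dimensional case: identity embedding, ReLU applied directly in 2-D.
low_dim = [(1.0, 0.0), (0.0, 1.0)]
# Higher-dimensional case: 8 directions spread evenly around the circle.
high_dim = [(math.cos(2 * math.pi * k / 8), math.sin(2 * math.pi * k / 8))
            for k in range(8)]

low_loss = collapsed_fraction(low_dim)
high_loss = collapsed_fraction(high_dim)
```

In the 2-D case a quarter of the circle (the quadrant where both coordinates are negative) collapses to zero, while in the 8-D case every point keeps at least one positive activation. This is the motivation for expanding before the non-linearity and keeping the narrow projection linear.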